Mercor: Enduring AI Infrastructure or Cycle-Driven Winner?

A deep dive into Mercor’s rise and the key debates around its long-term defensibility

Introduction

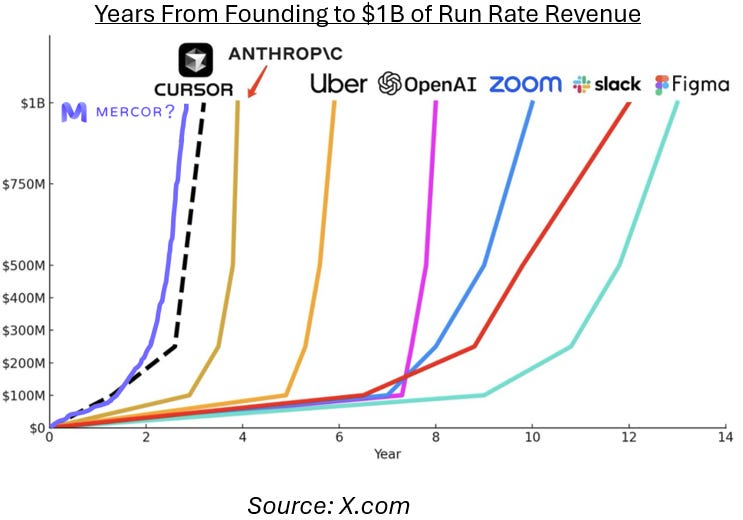

Of all the breakout companies in generative AI in 2025, few have been as meteoric and debated as Mercor. In just a single year, the company’s run rate revenue scaled from low double digit millions to over $850M, while its valuation increased 40x from $250 million to $10 billion, making its 22-year-old founders the youngest self-made billionaires in history.

Mercor’s rise coincides with a broader shift in AI development. As traditional scaling laws (the idea that more data and more compute lead to better models) have begun to plateau, frontier labs are increasingly turning toward post-training techniques, where the quality of human feedback matters more than sheer scale. This article is a deep dive into Mercor’s business and explores the key bull and bear debates over whether the company is building an enduring platform or benefiting from a transient phase of the AI cycle.

Founding Story

Mercor was founded in 2023 by CEO Brendan Foody, CTO Adarsh Hiremath, and Surya Midha. The three met in high school at Bellarmine College Preparatory, an all-boys Jesuit high school in San Jose, California. After high school, they attended different universities (Foody and Midha went to Georgetown University, while Hiremath went to Harvard), but they remained close and eventually dropped out in 2023 to build Mercor full time.

The founders’ core insight was that the global talent market systematically overlooks highly capable engineers in developing countries because vetting them at scale is too labor-intensive. To solve this, Mercor built an AI-driven recruiting workflow that automates resume screening and conducts structured interviews, enabling high-fidelity evaluation of skills and reasoning at scale.

While Mercor’s earliest customers were small startups that couldn’t afford Bay Area engineer salaries, the company quickly discovered strong product-market fit with AI labs. As frontier models advanced, labs no longer needed “junior level” feedback. They increasingly required expert human judgment to support post-training and model evaluations as they targeted the $40T knowledge work TAM. And with billions of dollars in capital behind them, AI labs were willing to pay for this expertise at scale, pulling Mercor toward the center of the post-training data stack.

Mercor’s Role in Model Training

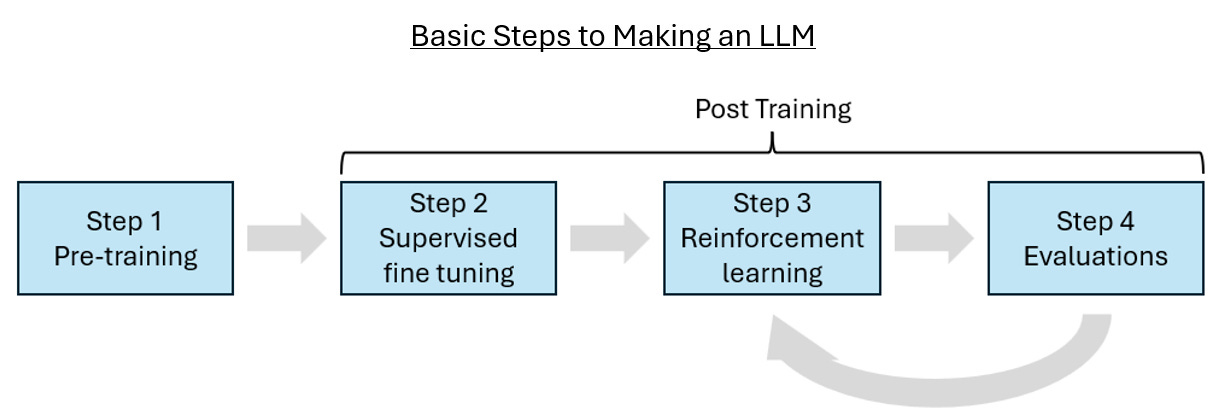

To understand where Mercor fits in the model development process, let’s imagine you are training a legal LLM:

Step 1 is pre-training: During pre-training, models ingest trillions of tokens of largely unstructured text to learn the statistical structure of language. Pre-training is a phase that relies on web scraping and massive compute power rather than human expertise. Mercor’s relevance at this point is pretty low.

Ex: At this point, a legal LLM will “know” case law facts, but won’t be able to “use” those facts in any meaningful work.

Step 2 is supervised fine tuning: While a pre-trained model can predict the next word, it does not yet reliably follow instructions. To address this, researchers train models on high-quality prompt–response pairs that demonstrate correct reasoning and desirable behavior. The model’s weights get adjusted based on how closely its predictions match the provided expert answers. Mercor supplies domain experts who generate these gold-standard examples.

Ex: At this point, if you ask the legal LLM to draft a term sheet, it will be able to give you a generic term sheet.

Step 3 is reinforcement learning: In this next step, the model develops taste. Here, models generate multiple responses to a prompt, which are then ranked by humans from best to worst. These rankings are used to train reward models that capture human preferences. As models grow more capable, identifying subtle errors and trade-offs requires increasingly sophisticated evaluators. Mercor is especially important here because its network of specialists lets labs apply expert judgment in domains where generalist annotators lack the necessary knowledge.

Ex: At this point, a legal LLM can draft higher-quality term sheets that account for deal-specific nuances.

Step 4 is Evaluations: Before a model gets released, it must pass a final test to measure its performance. If the model fails, then it may need to go back to prior steps for additional post-training. Mercor is highly relevant here because they supply the experts that create evaluation tests.

Ex: At this point, the model can do the work that a 1st year law associate can do with minimal hallucinations.
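To make Step 3 more concrete, here is a minimal sketch of how a single expert ranking can be expanded into pairwise preferences and scored with a pairwise logistic (Bradley-Terry style) loss, which is one common way to train reward models. It is not taken from Mercor or any particular lab, and the draft names and reward scores are made up for illustration.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked):
    """Expand a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps input order, so the first item of each pair ranks higher.
    return list(combinations(ranked, 2))


def pairwise_loss(rewards, pairs):
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over all pairs."""
    losses = []
    for preferred, rejected in pairs:
        margin = rewards[preferred] - rewards[rejected]
        losses.append(math.log(1.0 + math.exp(-margin)))
    return sum(losses) / len(losses)


# Hypothetical expert ranking of three term-sheet drafts, best first.
ranking = ["draft_b", "draft_a", "draft_c"]
pairs = ranking_to_pairs(ranking)

# Hypothetical reward-model scores: one set agrees with the expert, one does not.
agrees = {"draft_b": 2.0, "draft_a": 0.5, "draft_c": -1.0}
disagrees = {"draft_b": -1.0, "draft_a": 0.5, "draft_c": 2.0}

print(pairs)
print(pairwise_loss(agrees, pairs) < pairwise_loss(disagrees, pairs))
```

The loss is lower when the reward model orders responses the way the expert did, which is why the quality of the ranking matters: a generalist who ranks subtly wrong drafts highly would push the reward model in the wrong direction.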

Mercor’s Business Model

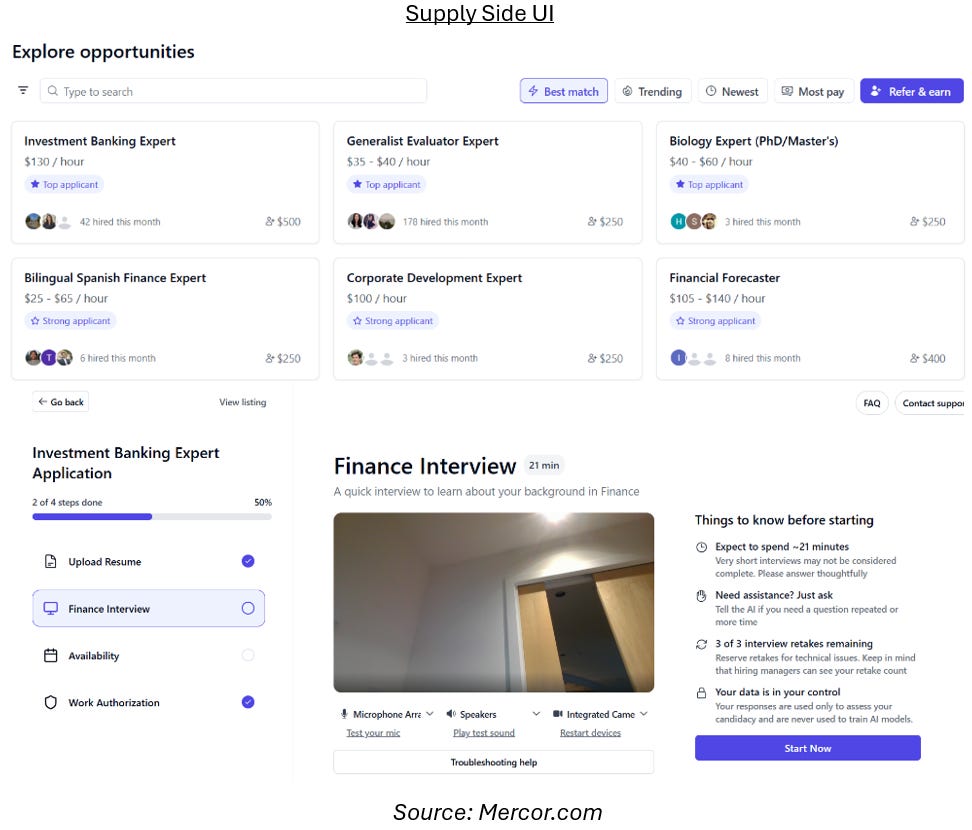

Mercor operates a two-sided marketplace connecting high-skill human labor with frontier AI labs. On the supply side, Mercor has built a network of more than 30k domain experts, including consultants, lawyers, and scientists. These experts apply for projects through Mercor’s AI-driven recruiting platform, which automates screening and interviewing to enable hiring and deployment at scale.

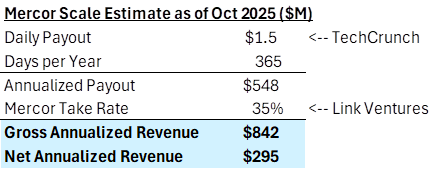

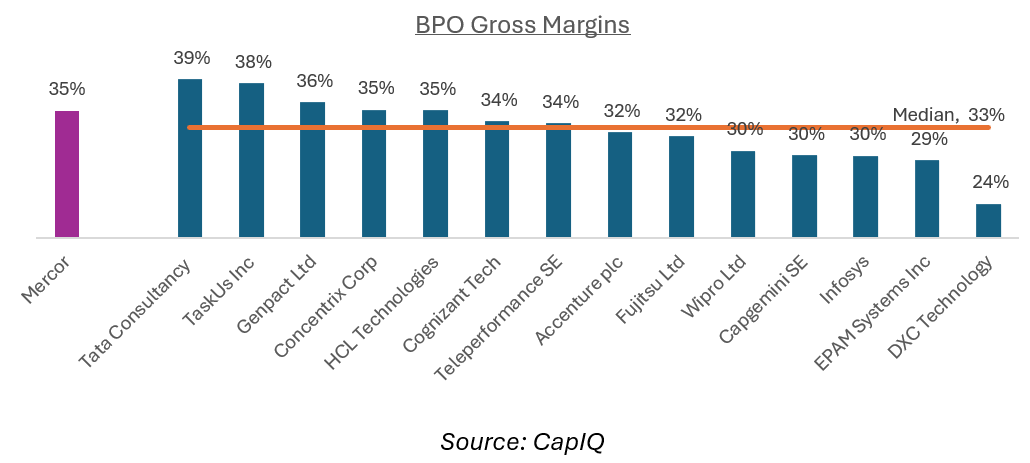

On the demand side, Mercor’s customers include leading AI labs such as OpenAI, Google DeepMind, and Meta. These customers use Mercor to source expert labor for a range of post-training tasks, including data labeling, rubric creation, and evaluation framework design. Mercor provides dashboards that allow labs to manage projects, monitor progress, and select experts based on domain and performance. By connecting supply and demand, Mercor earns a ~35% take rate on total gross payment volume. In its latest $10B fundraise announcement, Mercor shared that it is paying out more than $1.5M per day. Annualized and grossed up for the ~35% take rate, this implies ~$840M of run rate gross revenue as of October 2025, up from ~$75M in February 2025, a more than 10x increase in 8 months.
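The run rate figure can be reproduced with a few lines of arithmetic. This sketch assumes the $1.5M per day is what experts receive after Mercor’s cut, that the take rate is exactly 35%, and that the payout rate holds for 365 days; the function and variable names are illustrative only.

```python
def gross_run_rate(daily_payout: float, take_rate: float, days: int = 365) -> float:
    """Annualized gross payment volume implied by daily expert payouts."""
    annual_payouts = daily_payout * days
    # Payouts are what is left after the platform keeps its take rate,
    # so gross volume = payouts / (1 - take_rate).
    return annual_payouts / (1.0 - take_rate)


oct_2025 = gross_run_rate(daily_payout=1.5e6, take_rate=0.35)
feb_2025 = 75e6  # ~$75M run rate cited for February 2025

print(f"Implied gross run rate: ${oct_2025 / 1e6:.0f}M")
print(f"Growth multiple vs. February: {oct_2025 / feb_2025:.1f}x")
```

Because the company said it pays out "more than" $1.5M per day, the result is best read as a floor rather than a point estimate.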

This type of growth is rare, and bulls will not be surprised if the company passes $1B of run rate gross revenue by the end of 2025.

Competitive Landscape

Mercor operates in a rapidly expanding but increasingly crowded market. As frontier labs scale post-training budgets into the single digit billions, competition is coming from both incumbents and new entrants, and across skill levels.

Low skill incumbents – Historically, the data labeling market was dominated by platforms built around low-skill, offshore labor paid <$10 an hour to perform tasks such as image labeling, text classification, and speech tagging. These providers optimized for volume and cost rather than judgment and domain expertise. While effective for earlier generations of models, this segment has become increasingly irrelevant. Take Appen (APX), for example: the company’s revenue has declined for 4 consecutive years and is expected to reach ~$155 million in 2025. In early 2024, it lost its largest customer, Google, which accounted for ~30% of its revenue. At its peak in 2020, Appen had a market cap of ~$4.5B. Today, it is worth only ~$140M. The type of work Appen does can increasingly be done faster and cheaper by LLMs.

Other platforms such as Scale AI began in this lower-skill category but successfully moved upmarket. Today, Scale pays ~$20 per hour for US-based generalists, and up to $150 an hour for certain domain experts, to evaluate models on instruction following and RLHF. However, following Meta’s strategic investment in June 2025, Scale has faced hesitation from other frontier labs due to concerns around platform neutrality, which has pushed incremental volume to players such as Mercor. Additionally, AI labs do not view Scale as a leader in providing high-skilled talent. Rumors suggest that even Meta’s data acquisition teams have been shifting share away from Scale in favor of competitors. Scale is reportedly on track to cross $2B of run rate revenue by the end of 2025.

Higher skilled competitors – Newer platforms like Surge and Micro1 were built explicitly around high-skill labor from day one. Micro1 is Mercor’s closest analogue in focusing on matching high-skilled labor to labs, whereas Surge works with the talent directly and supplies best-in-class data sets and evaluations. Surge reported crossing $1B in run rate revenue in mid 2025 without raising any external capital.

New entrants – Beyond purpose-built labeling platforms, adjacent marketplaces are beginning to enter the space by repurposing existing labor networks. As a college recruiting platform, Handshake has a base network of 18M students and alumni that it’s tapping into for AI data labeling work. They released their Handshake AI product in June 2025. Similarly, Uber released Uber AI Solutions in June 2025.

Synthetic data – Finally, synthetic data represents a longer-term competitive threat. Models are already capable of generating and labeling large volumes of low-complexity data. For example, Midjourney models train on Midjourney outputs. However, it remains an open question whether synthetic approaches can fully replace high-skill human judgment.

Bull vs Bear Debate

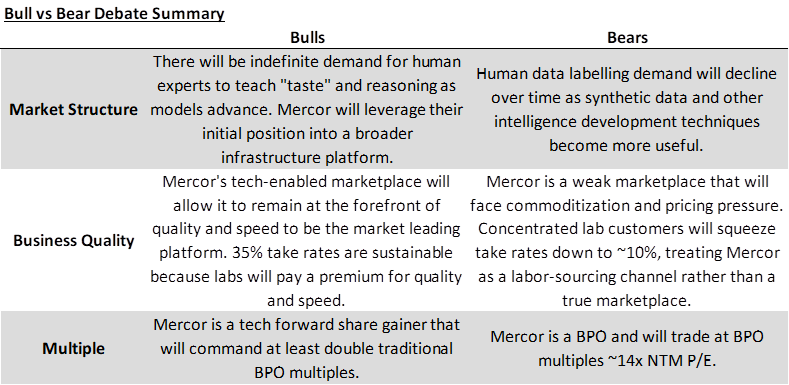

Mercor has become one of the most polarizing companies in the AI ecosystem. The growth trajectory of the company is undeniable, and Mercor bulls expect the company to use this wedge into customers to develop a broader infrastructure platform to become a permanent layer in the AI stack. However, Mercor bears question the durability of demand for human data and the overall business quality.

What the Bulls See:

Rapidly growing market – Bulls argue that as AI models expand into increasingly complex forms of knowledge work, demand for high-skill human input will continue to grow. As scaling laws around data and compute flatten, post-training has become the primary lever for improving model performance. Furthermore, the type of work expected from models has shifted from pattern recognition (Is this a cat?) to specialized knowledge work reasoning (What arguments should one use in a court case?). All of this is driving rapid growth in demand for expert knowledge for post-training. In the bull view, synthetic data on its own will never be good enough, and there will be unlimited vectors of human intelligence for which models need human-in-the-loop post-training. As a marketplace for expert knowledge, Mercor has significantly benefited from this tailwind. Furthermore, by embedding itself deeply with customers, Mercor will earn the right to cross-sell other products such as RL environments, agentic workflow evaluations, and more to become the infrastructure for future work.

Differentiated product offering – Bulls believe that Mercor will be able to maintain a differentiated product vs competitors. By automating screening and interviewing, Mercor is building a compounding network effect where the highest quality and quantity of global experts reside on a single platform. This will allow it to win high wallet share with customers and support a durable take rate of ~35%, in line with BPO medians, and protect the company from the margin compression currently hitting offshore, low-skilled labor competitors. As AI training evolves into a permanent infrastructure need similar to ongoing employee development, Mercor’s role as the trusted “recruiting and training platform” ensures sustainable, premium margins.

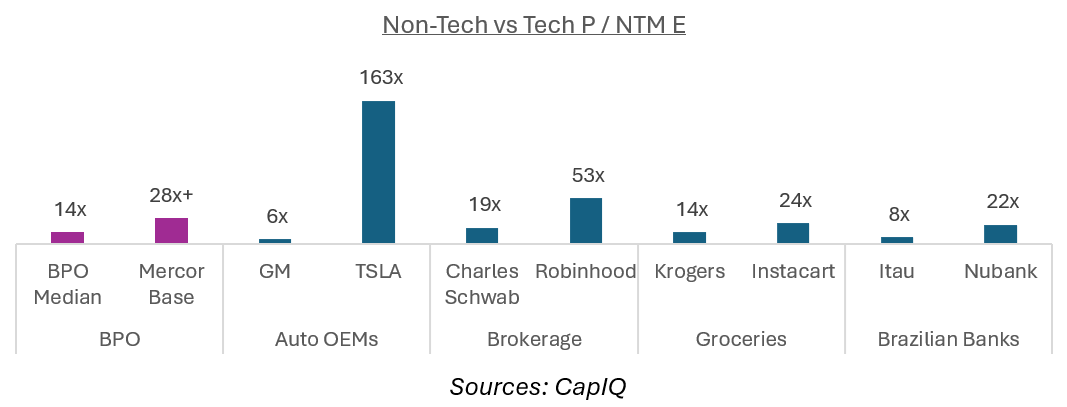

High multiple – Finally, bulls believe Mercor should trade at a premium to traditional BPOs, which trade at ~14x NTM P/E. In other industries, tech-forward businesses frequently trade at more than 2x the multiple of their non-tech peers. Mercor is a tech-enabled, share-gaining platform, which should support a premium multiple.

What the Bears See:

Market durability uncertainty – Bears argue that the current reliance on high-skill human labor is a transitional rather than permanent state on the path to intelligence. If you believe AGI is imminent, or that advancements in intelligence will come from other techniques, then eventually there will be no need for human data. Reinforcement learning environments built in software (rather than reinforcement learning from human feedback) are already catching on as a popular post-training tool. Additionally, Anthropic’s Constitutional AI reportedly required only a limited number of human examples for alignment, with the AI self-generating the remainder. If the future equilibrium is a state where the data AI models use is no longer human-dependent, then the value of a human recruiting platform vanishes.

Low business quality – Bears also question the underlying business quality. As research labs mature and start to focus more on costs, they may start to squeeze Mercor’s take rate.

Demand side concentration: Mercor works with a relatively concentrated group of AI lab customers. This makes it more like a labor sourcing channel than a true marketplace, which weakens both its bargaining power and its value proposition. For example, Instacart is not as powerful a marketplace as Uber because its grocery store supply base is relatively concentrated. Partially as a result, Instacart’s take rate on GMV is only ~10%, compared to Uber’s ~27%. Mercor’s take rate of ~35% is not far from where BPO gross margins sit today (~34%). However, BPOs have a fragmented customer base. Unless Mercor provides a differentiated service that competitors cannot, I would not be surprised if its take rate declined as more competitors enter.

Supply / demand multi-homing: Additionally, both the supply and demand side tend to use multiple platforms. This lack of exclusivity limits the power of middlemen like Mercor because both sides can shift activity towards better prices.

Competition from incumbents and new entrants: As outlined above, Mercor faces an increasingly crowded competitive landscape.
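To put the take rate concern in rough numbers, the sketch below holds gross volume fixed at the ~$840M run rate cited earlier and applies the take rates mentioned in this section (Mercor’s ~35%, Uber’s ~27%, Instacart’s ~10%) as purely hypothetical scenarios for Mercor. It is not a forecast, and the scenario labels are illustrative.

```python
GROSS_RUN_RATE_M = 840  # ~$840M gross run rate cited in the article, in $M

# Take rates quoted in the article, reused as hypothetical scenarios for Mercor.
scenarios = {
    "current (~35%)": 0.35,
    "Uber-like (~27%)": 0.27,
    "Instacart-like (~10%)": 0.10,
}


def net_revenue(gross_m: float, take_rate: float) -> float:
    """Net revenue in $M that the platform keeps at a given take rate."""
    return gross_m * take_rate


for label, rate in scenarios.items():
    print(f"{label:>22}: ${net_revenue(GROSS_RUN_RATE_M, rate):.0f}M net")
```

Holding volume constant is a simplification, since lower pricing could also win share, but it shows how sensitive the business is to the take rate bears expect to compress.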

Low multiple – Bears believe that at its core, Mercor is a services business that is more similar to BPOs than technology companies. As a result, in public markets, it is more likely to be valued on earnings, rather than revenue.

Conclusion

As the AI race for intelligence continues in the near term, capital inflow to human data labeling and post-training is likely to grow. In that environment, Mercor has emerged as a key player. Through interview automation, it delivers high quality at speed and scale. Its growth reflects a powerful market shift from volume to judgment, and from low-skill labeling to high-skill human feedback. The open question remains: What role will human data play in how models reach the next level of intelligence? If the answer is large, then Mercor is well-positioned to be a leading provider.

But if the answer is small, can Mercor adapt to a different context? Scale AI avoided becoming Appen not by staying in commodity labeling, but by continuously pivoting toward higher-skill work. Alexandr Wang’s prescience was in evolving the company as the market moved up the skill curve. For Mercor, I’d want to see the company expand its product suite beyond selling units of labor towards alleviating other bottlenecks customers face in advancing intelligence. Whether the Mercor team can demonstrate this adaptability by continuing to reinvent itself as definitions of “expert” shift will determine if Mercor becomes a durable platform, or a remarkable beneficiary of this phase of the AI race.

Special thank you to Shangda Xu, Palak Goel, and Will Quist for inspiration and feedback.
